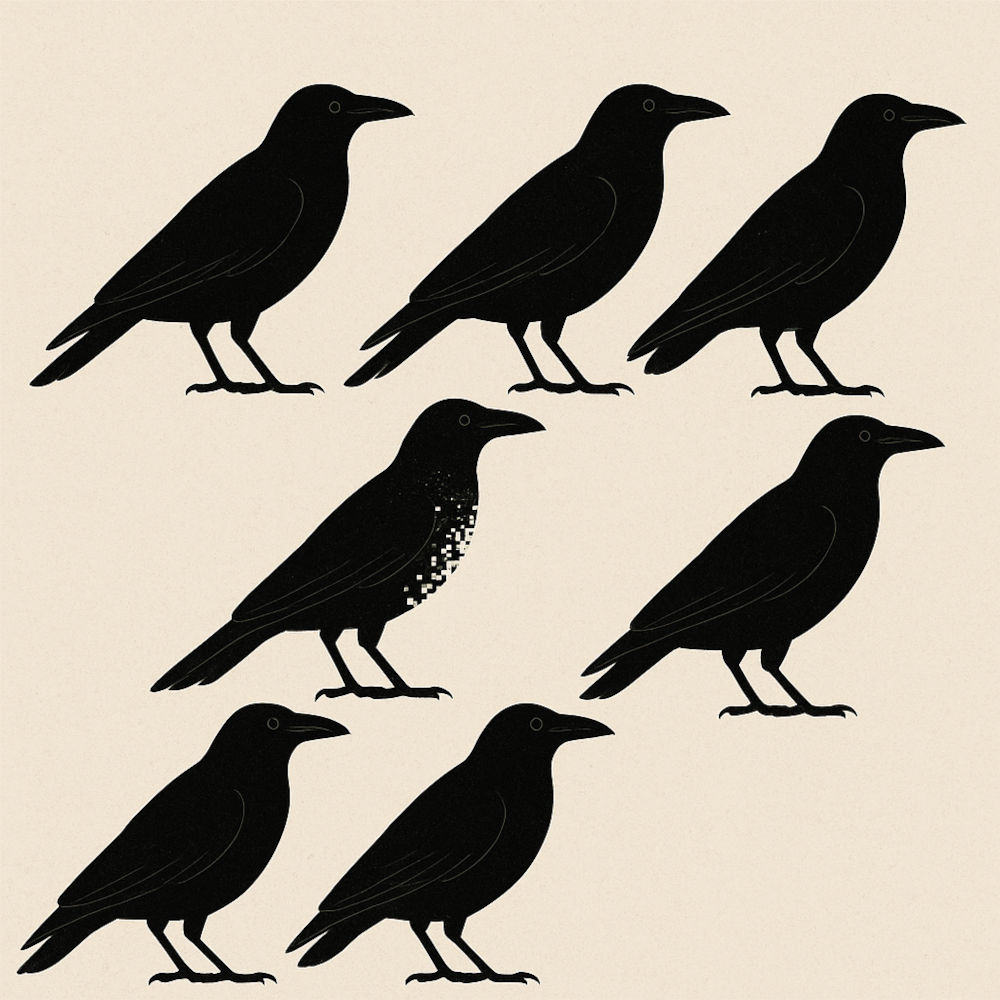

[To an untrained eye, all crows look the same. The same is true for algorithms that learn from narrow data.]

To the untrained eye, all crows look alike. But they aren’t. Each has its own tone, call and character. When we fail to notice those differences, we suffer from what I call the “crow syndrome”.

In the age of Artificial Intelligence, this blindness isn’t just a human quirk. It’s becoming a design flaw in the machines that now shape our lives.

When AI can’t tell one crow from another

Across global enterprises, AI systems are learning from data that often reflects only one reality.

Take something as mundane as closing a company’s books. A CFO in New York typically closes her fiscal year on December 31. Her counterpart in Mumbai closes hers on March 31. If an AI system has been trained mostly on American data, it will assume all CFOs work to a December year-end. When that model is applied in India, it struggles, because it can’t tell one crow from another.

That’s what happens when algorithms learn from narrow or homogeneous datasets. They begin to see the world in grayscale.
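The year-end example can be made concrete with a minimal sketch. It assumes a hypothetical lookup table, `FISCAL_YEAR_END`, holding only the two conventions mentioned above; the function names are illustrative and real companies may choose other dates. The point is simply that a system with one hard-coded assumption gives the wrong answer the moment it leaves home.

```python
from datetime import date

# Illustrative conventions only (month, day); these mirror the two
# examples in the text and are not a complete or authoritative list.
FISCAL_YEAR_END = {
    "US": (12, 31),
    "IN": (3, 31),
}

def naive_year_end(as_of: date) -> date:
    """What a system that has only seen US data effectively assumes."""
    return date(as_of.year, 12, 31)

def year_end(country: str, as_of: date) -> date:
    """Next fiscal year-end on or after `as_of` for the given country."""
    month, day = FISCAL_YEAR_END[country]
    candidate = date(as_of.year, month, day)
    if candidate < as_of:
        # This year's close has already passed; use next year's.
        candidate = date(as_of.year + 1, month, day)
    return candidate

today = date(2025, 1, 15)
print(naive_year_end(today))   # 2025-12-31, applied to everyone
print(year_end("US", today))   # 2025-12-31
print(year_end("IN", today))   # 2025-03-31
```

For the Mumbai CFO, the naive version is off by nine months.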

Why AI inherits our blindness

But this isn’t just a problem of limited data—it’s a reflection of something deeper. Machines don’t develop prejudice on their own; they inherit ours. The datasets we feed them are mirrors of the world as we see it—shaped by who collects the data, who labels it, and who gets left out. Every gap in that data becomes a blind spot in the algorithm. When we fail to see diversity in ourselves, our systems simply learn to ignore it faster.

What psychology calls the “cross-race effect”

Psychologists have long noted that we recognise faces of our own race more easily than those of others—a bias known as the cross-race effect. When it comes to “out-groups”, our minds tend to blur the differences.

AI systems behave the same way. When trained mostly on data from one population or geography, they grow blind to the rest. The result is digital discrimination: machines that can’t tell one face, accent, or cultural nuance from another.

When bias becomes consequence

Consider Detroit in 2020. Robert Williams, a Black man, was arrested because a facial-recognition system wrongly identified him. The algorithm had been trained largely on white faces. The same crow syndrome that makes humans overlook difference was now encoded in software—and it put an innocent man in jail.

A related case in the UK, Ed Bridges v. South Wales Police, led the Court of Appeal to rule in 2020 that the force’s use of facial recognition was unlawful, partly because the technology hadn’t been tested for race and gender bias. Even in the corporate world, bias plays out quietly: AI tools screening resumes have preferred “white-sounding” names, and customer service bots have been known to misread dialects as negative sentiment.

Such bias shows up everywhere: in job screenings, in healthcare algorithms that under-diagnose conditions in patients with certain skin tones, even in the way AI art tools define beauty. Ask an image generator to “draw a beautiful woman by a river” and you’ll likely see the same slim, white figure repeated endlessly.

Why this matters

When AI can’t see difference, three things follow.

Injustice. Wrongful arrests, skewed hiring, unfair lending, or misdiagnosis.

Erosion of trust. Communities that are misrecognised lose faith in technology meant to serve them.

Reinforced inequality. Systems that already favour the powerful get even better at serving them.

Bias in data becomes bias in outcomes—faster, wider, harder to reverse.

How to teach AI to see better

There’s no magic fix, but we know what helps.

Diverse and representative data. Training datasets must include balanced samples across race, gender, geography and body types.

Transparency and oversight. Regulators and enterprises need clear guardrails and public accountability.

Continuous testing. Bias audits and model updates must be routine, not reactive.
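A routine bias audit can start very small. The sketch below is one possible shape, not a standard: it measures how much of the data each group makes up, then compares accuracy group by group. The function names, the toy records, and the `max_gap` threshold of 0.05 are all assumptions made for illustration; a real audit would choose metrics and thresholds to suit the use case.

```python
from collections import Counter, defaultdict

def representation(groups):
    """Share of each group in a dataset."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label)."""
    correct = defaultdict(int)
    seen = defaultdict(int)
    for group, truth, predicted in records:
        seen[group] += 1
        correct[group] += int(truth == predicted)
    return {g: correct[g] / seen[g] for g in seen}

def audit(records, max_gap=0.05):
    """Fail if the best- and worst-served groups differ by more than max_gap.

    max_gap is an arbitrary illustrative threshold, not a recommended value.
    """
    scores = accuracy_by_group(records)
    gap = max(scores.values()) - min(scores.values())
    return {"scores": scores, "gap": gap, "passed": gap <= max_gap}

# Made-up toy data: group A is over-represented and better served.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0),
]
print(representation([group for group, _, _ in records]))
print(audit(records))  # accuracy is 1.0 for A and 0.5 for B, so the audit fails
```

Run on every model update rather than after a complaint, a check like this turns "continuous testing" from a slogan into a habit.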

The film Humans in the Loop, streaming on Netflix, captures this tension beautifully, showing that AI’s greatest flaw isn’t malice but indifference. It mirrors what it sees.

A call to those who build, use, and govern AI

We can’t leave AI to see the world through a monochrome lens.

If we stop correcting its errors, it will stop learning—much like a map app that never knows a new road has opened because no one reports it.

To enterprise leaders: look beyond customer KPIs; the world your algorithms shape is the one your families will live in.

To policymakers: mandate diversity in data before bias becomes law.

To all of us: give feedback, speak up, teach our tools to see the full spectrum of humanity.

Looking ahead

The next wave of AI ethics will look beyond accuracy and fairness. It will ask tougher questions: Who defines the data? Whose worldview gets encoded? These aren’t technical questions; they’re moral ones. As AI systems become embedded in governance, finance, and education, these questions will only grow sharper. And as AI regulation gathers pace across the world, the real test will be whether we can design systems that not only recognise difference, but respect it.

Seeing every crow

It feels like a moral obligation to warn society before we cross a tipping point. Some argue we already have. Yet the optimist in me believes in course correction.

So here’s a small plea: let’s learn to paint every kind of crow, from rooks to ravens, each distinct, intelligent, and worthy of being seen.

Sunil Malhotra on Nov 12, 2025 7:29 a.m. said

When human civilisation itself is steeped in bias, it is hypocritical—if not impossible—to expect AI to be pure. AI is merely a mirror: clear, reflective, and brutally accurate, showing us who we truly are. Unless we, as humans, make a conscious pledge to change—and practice what we preach—it is delusional to believe we can contain the speed and scale at which technology learns to imitate us.

Ethics in AI needs to be addressed at each stage of the AI lifecycle. First, decisions about what to design and develop should draw on the innate wisdom of humankind, so that technologies align with human values and societal needs. Second, AI should be deployed to maximise human and planetary well-being, through strategies that avoid harm and promote sustainability. Finally, to promote multicultural harmony, control over AI’s use should be ceded to its potential beneficiaries, empowering them to determine when, where, and how AI is used in ways that best serve their unique contexts.